SkyLens: A Technical Overview of an Advanced AR/VR Data Integration Application for Meta Quest 3

SkyLens is an innovative Augmented Reality (AR) and Virtual Reality (VR) application developed for the Meta Quest 3 headset. It is designed to revolutionize real-time data visualization in 3D space. This platform integrates multiple data sources, including flight tracking, weather information, satellite positions, and ground topography, to create an immersive and interactive environment.

Key Features of SkyLens

• Real-time data integration from various sources, providing up-to-date information based on the user's GPS location.

• Advanced user interaction mechanisms that enable controller-free operation, including gaze tracking, hand gesture recognition, and voice command integration.

• A unique deformable navigation bubble for intuitive movement through the virtual space.

• Sophisticated data overlay rendering and heads-up display (HUD) architecture for seamless information presentation.

• Integration of comprehensive satellite data, weather information, live aviation data, and ground topography for enhanced realism and functionality.

While developing SkyLens, our team addressed several technical challenges inherent in advanced AR/VR applications, including real-time data synchronization, 3D spatial accuracy, and interaction responsiveness. By developing custom-built data pipelines, dynamic depth-scaling algorithms, and optimized machine learning models, we not only solved our own technical hurdles but also created a potential blueprint for other developers facing similar obstacles.

SkyLens demonstrates the potential of AR/VR technology in transforming complex data visualization, serving as both a practical tool and a case study for academic research in data science, computer graphics, and human-computer interaction.

Pushing the Boundaries of AR/VR

Data is complicated. Visualization can help, but traditional methods fall short. Large-scale enterprises often have vast repositories of data, typically surfaced as spreadsheets or, at best, dashboards with graphs and trendlines. However, these formats require analysts to mentally transform numbers and charts into actionable insights, a process that can be time-consuming and prone to misinterpretation.

SkyLens pushes the boundaries of data visualization by contextualizing information in three-dimensional space. By overlaying dynamic data from various external sources in a 3D environment, it lets users explore spatially contextual information with little effort. Instead of presenting data in cells or charts, SkyLens places the data next to relevant real-world objects, making it tangible and immediate.

The Meta Quest 3 headset supports stereoscopic 3D in both VR and AR, providing a rich, immersive visual experience. Using the headset's network-based location, we determine the user's approximate GPS coordinates and retrieve data relevant to that location, including:

• Topography

• Satellite imagery

• Highway and bridge geometry

• Building geometry and texture data

• Weather conditions

• Aircraft (in-flight and on the ground), and

• Satellites visible from that location
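Retrieving data relevant to the user's location usually starts with a geographic query region around that point. The sketch below computes a latitude/longitude bounding box of a given radius, assuming a spherical Earth; the function name and the 50 km radius in the usage note are illustrative, not SkyLens' actual parameters, and the sketch does not handle boxes that cross the antimeridian.

```python
import math
from typing import Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (spherical approximation)


def bounding_box(lat_deg: float, lon_deg: float, radius_km: float) -> Tuple[float, float, float, float]:
    """Return (min_lat, min_lon, max_lat, max_lon) enclosing a circle of radius_km."""
    # One radian of arc on the sphere spans EARTH_RADIUS_KM kilometres.
    dlat = math.degrees(radius_km / EARTH_RADIUS_KM)
    # A degree of longitude shrinks with cos(latitude); guard against the poles.
    cos_lat = max(math.cos(math.radians(lat_deg)), 1e-6)
    dlon = math.degrees(radius_km / (EARTH_RADIUS_KM * cos_lat))
    return (max(lat_deg - dlat, -90.0), lon_deg - dlon,
            min(lat_deg + dlat, 90.0), lon_deg + dlon)
```

For a user in Rome, `bounding_box(41.9028, 12.4964, 50.0)` yields a box a little under 0.9° tall and wider in longitude, which could then be passed to any data service that accepts rectangular area queries.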

This approach offers a more intuitive and immersive alternative to traditional web browsers for accessing and interpreting location-based information. Gathering and visualizing this spatially contextual information through conventional means would be time-consuming, awkward, and primitive in comparison.


SkyLens enables virtual teleportation, allowing users to explore real-time data anywhere on the planet. With a simple voice prompt, users can move from exploring real-time flight patterns over Rome to analyzing space debris orbiting above Paris, all within seconds.

The system effortlessly renders intricate 3D models of satellites alongside dynamic weather patterns and live air traffic data, creating a rich, multi-layered visualization that spans from ground level to low Earth orbit. This immersive environment allows users to interact with and manipulate diverse datasets simultaneously, from granular local information to vast global systems, all within a single, fluid interface.

The following sections will delve into the technical architecture, data integration methodologies, and innovative user interaction frameworks that enable these advanced features, revealing the engineering solutions behind SkyLens' transformative capabilities.

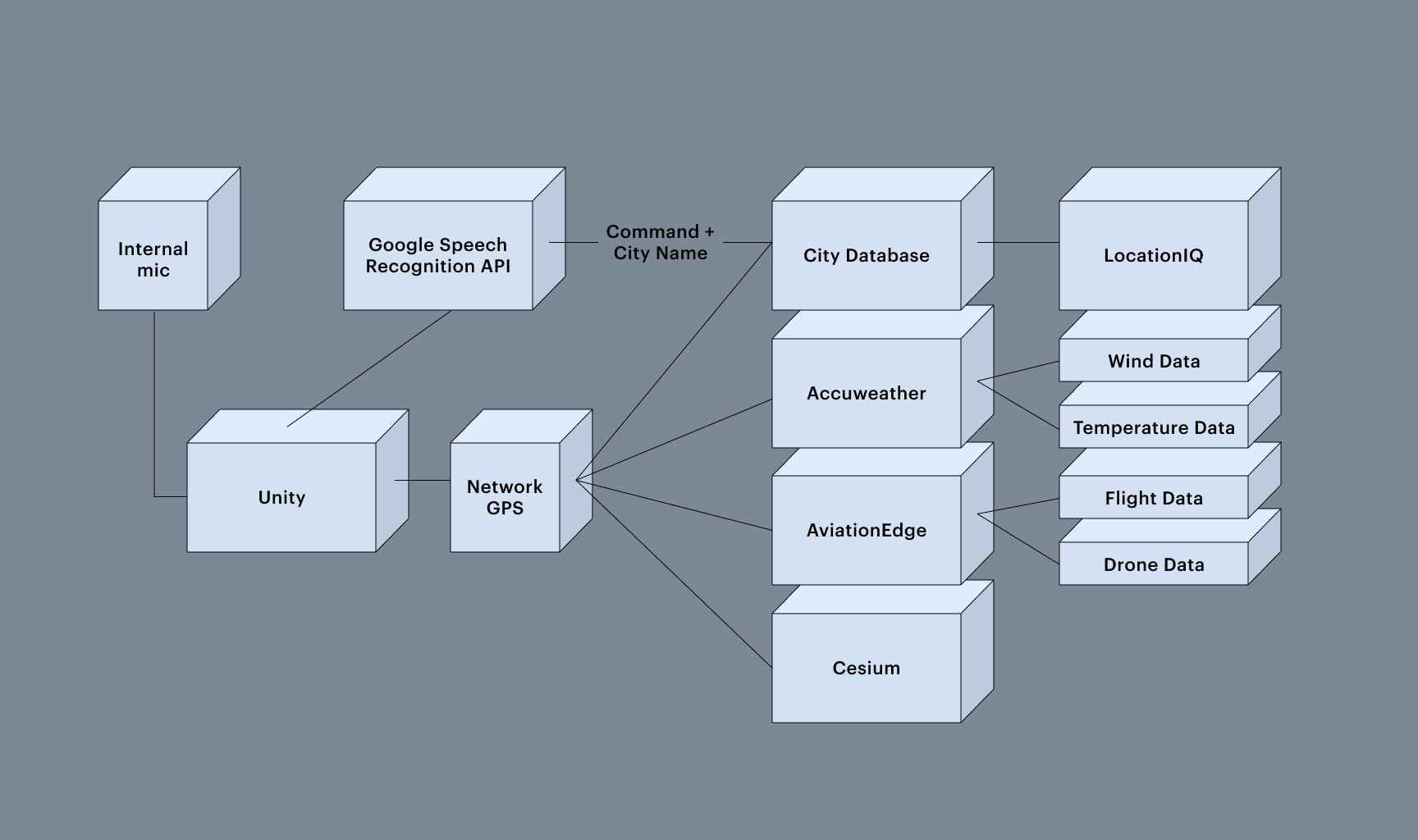

System Architecture and Data Integration

At the heart of SkyLens is a system architecture designed to handle vast amounts of real-time data while delivering a seamless AR/VR experience. This section explores data pipelines, processing algorithms, and integration methodologies that form the backbone of SkyLens. We'll examine how these components work together to turn raw data into an immersive, interactive environment.

Real-Time Data Sourcing and Integration

SkyLens integrates data from multiple real-time sources, updating dynamically to provide the most current information. Key data integrations include:

Flight Data

SkyLens uses aviation tracking data to present real-time aircraft positions, trajectories, and details for flights in the user’s vicinity.
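To limit the display to flights in the user's vicinity, positions returned by a tracking service can be filtered by great-circle distance. This is a minimal sketch using the haversine formula on a spherical Earth; the `Aircraft` record, its fields, and the radius are hypothetical and not tied to any particular flight-tracking API.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (spherical approximation)


@dataclass
class Aircraft:
    callsign: str
    lat: float         # degrees
    lon: float         # degrees
    altitude_m: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby(aircraft, user_lat: float, user_lon: float, radius_km: float):
    """Keep only aircraft within radius_km of the user, nearest first."""
    with_dist = [(haversine_km(user_lat, user_lon, a.lat, a.lon), a) for a in aircraft]
    return [a for d, a in sorted(with_dist, key=lambda t: t[0]) if d <= radius_km]
```

Sorting by distance also gives a natural priority order if only the closest few aircraft should receive detailed labels.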

Weather Information

Weather data is sourced from global weather services and provides accurate local overlays, including temperature, precipitation, and wind patterns.

Satellite Tracking

Data provided by organizations such as the Union of Concerned Scientists (UCS) enables real-time visualization of satellites, both active and inactive, in orbit.
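Deciding which satellites are visible from a location can be reduced to an elevation-angle test: is the satellite geometrically above the observer's horizon? The sketch below assumes the satellite's Earth-centred, Earth-fixed (ECEF) position has already been computed (for example by propagating its orbital elements, a step not shown here), converts the observer's coordinates to ECEF on the WGS-84 ellipsoid, and measures the angle above the local horizon. The 10° minimum elevation is an illustrative cut-off, not a SkyLens setting.

```python
import math

WGS84_A = 6378137.0                 # semi-major axis, metres
WGS84_F = 1 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)  # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h_m):
    """Convert latitude/longitude/ellipsoidal height to ECEF metres."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1 - WGS84_E2 * math.sin(lat) ** 2)  # prime vertical radius
    x = (n + h_m) * math.cos(lat) * math.cos(lon)
    y = (n + h_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - WGS84_E2) + h_m) * math.sin(lat)
    return (x, y, z)


def elevation_deg(obs_lat, obs_lon, obs_h, sat_ecef):
    """Angle of the satellite above the observer's local horizon, in degrees."""
    ox, oy, oz = geodetic_to_ecef(obs_lat, obs_lon, obs_h)
    rx, ry, rz = sat_ecef[0] - ox, sat_ecef[1] - oy, sat_ecef[2] - oz
    lat, lon = math.radians(obs_lat), math.radians(obs_lon)
    # Unit vector normal to the ellipsoid at the observer (local "up").
    ux, uy, uz = math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat)
    rng = math.sqrt(rx * rx + ry * ry + rz * rz)
    s = (rx * ux + ry * uy + rz * uz) / rng
    return math.degrees(math.asin(max(-1.0, min(1.0, s))))  # clamp rounding error


def is_visible(obs_lat, obs_lon, obs_h, sat_ecef, min_elevation_deg=10.0):
    return elevation_deg(obs_lat, obs_lon, obs_h, sat_ecef) >= min_elevation_deg
```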

Ground Topography and 3D Building Data

Accurate representations of terrain and structures create a realistic virtual environment.

Astronomical Calculations

SkyLens determines the real-time positions of celestial bodies, such as the Sun and Moon, using the user's GPS data for spatially accurate visualizations.
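Once a body's azimuth and elevation are known for the user's location, placing it in the scene is a change of coordinates. This sketch assumes a hypothetical scene convention of x = east, y = up, z = north, with azimuth measured clockwise from true north; an engine with different axes would need the components reordered.

```python
import math


def az_el_to_scene(azimuth_deg, elevation_deg, distance=1.0):
    """Convert azimuth (clockwise from north) and elevation to a scene-space vector.

    Assumed axes: x = east, y = up, z = north.
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    east = math.cos(el) * math.sin(az)
    north = math.cos(el) * math.cos(az)
    up = math.sin(el)
    return (distance * east, distance * up, distance * north)
```

One common approach is to place the Sun or Moon at a large, fixed `distance` so it stays behind all other content while its direction remains astronomically correct.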

User Interaction Framework

SkyLens offers advanced interaction mechanisms, eliminating the need for traditional controllers. The system supports:

Gaze Tracking

Users can interact with data points through gaze, enabling easy selection and retrieval of information.
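Gaze selection can be modelled as picking the object whose direction from the eye lies closest to the gaze ray, within a small tolerance cone. This generic sketch takes the gaze origin and direction as plain vectors supplied by whatever tracking the headset provides; the 3° cone and the object IDs are hypothetical, and it does not use any Meta SDK API.

```python
import math


def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v) if n > 0 else None


def gaze_target(eye, gaze_dir, objects, max_angle_deg=3.0):
    """Return the id of the object closest to the gaze ray, or None.

    objects maps an id to a 3D position; an object qualifies if the angle
    between the gaze direction and the eye-to-object vector is within
    max_angle_deg.
    """
    g = _normalize(gaze_dir)
    if g is None:
        return None
    best_id, best_angle = None, max_angle_deg
    for obj_id, pos in objects.items():
        to_obj = _normalize(tuple(p - e for p, e in zip(pos, eye)))
        if to_obj is None:
            continue  # object coincides with the eye; direction undefined
        cos_angle = max(-1.0, min(1.0, sum(a * b for a, b in zip(g, to_obj))))
        angle = math.degrees(math.acos(cos_angle))
        if angle <= best_angle:
            best_id, best_angle = obj_id, angle
    return best_id
```

Using an angular cone rather than an exact ray hit keeps small, distant targets such as aircraft selectable despite tracking noise.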

Hand Gesture Recognition

Hand movements detected by the headset’s sensors allow users to navigate and manipulate the VR environment.
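A pinch is a common hand-tracking gesture and can be recognized from the distance between the thumb tip and the index fingertip. The sketch below assumes fingertip positions in metres are already available from the hand-tracking runtime; it is a generic illustration with hypothetical thresholds, not Meta's built-in pinch detection. Two thresholds provide hysteresis so the gesture does not flicker when the fingers hover near a single cut-off.

```python
import math


class PinchDetector:
    """Detects a pinch from thumb-tip and index-tip positions (metres)."""

    def __init__(self, press_m=0.015, release_m=0.03):
        self.press_m = press_m      # start pinching below this distance
        self.release_m = release_m  # stop pinching above this distance
        self.pinching = False

    def update(self, thumb_tip, index_tip):
        """Feed one frame of fingertip positions; returns the pinch state."""
        d = math.dist(thumb_tip, index_tip)
        if not self.pinching and d < self.press_m:
            self.pinching = True
        elif self.pinching and d > self.release_m:
            self.pinching = False
        return self.pinching
```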

Voice Command Integration

Speech recognition facilitates hands-free interaction, enabling users to issue commands or request information in natural language.
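After speech recognition produces a transcript, the text must be mapped to an action, such as teleporting to a named place or toggling a data layer. The sketch below uses simple regular-expression patterns to extract an intent and its argument; the intent names, phrasings, and layer names are hypothetical, and a natural-language system like the one described here would more likely use a trained language-understanding model.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    intent: str
    argument: Optional[str] = None


# Hypothetical command grammar: (intent, pattern with a named "arg" group).
PATTERNS = [
    ("teleport", re.compile(r"^(?:take me to|go to|fly to)\s+(?P<arg>.+)$")),
    ("show_layer", re.compile(r"^show(?: me)?\s+(?P<arg>flights|weather|satellites|terrain)$")),
    ("hide_layer", re.compile(r"^hide\s+(?P<arg>flights|weather|satellites|terrain)$")),
]


def parse_command(transcript: str) -> Optional[Command]:
    """Map a recognized utterance to a Command, or None if nothing matches."""
    text = transcript.strip().lower().rstrip(".!?")
    for intent, pattern in PATTERNS:
        m = pattern.match(text)
        if m:
            return Command(intent, m.group("arg"))
    return None
```

A teleport intent would still need a geocoding step to turn a place name such as "rome" into coordinates.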

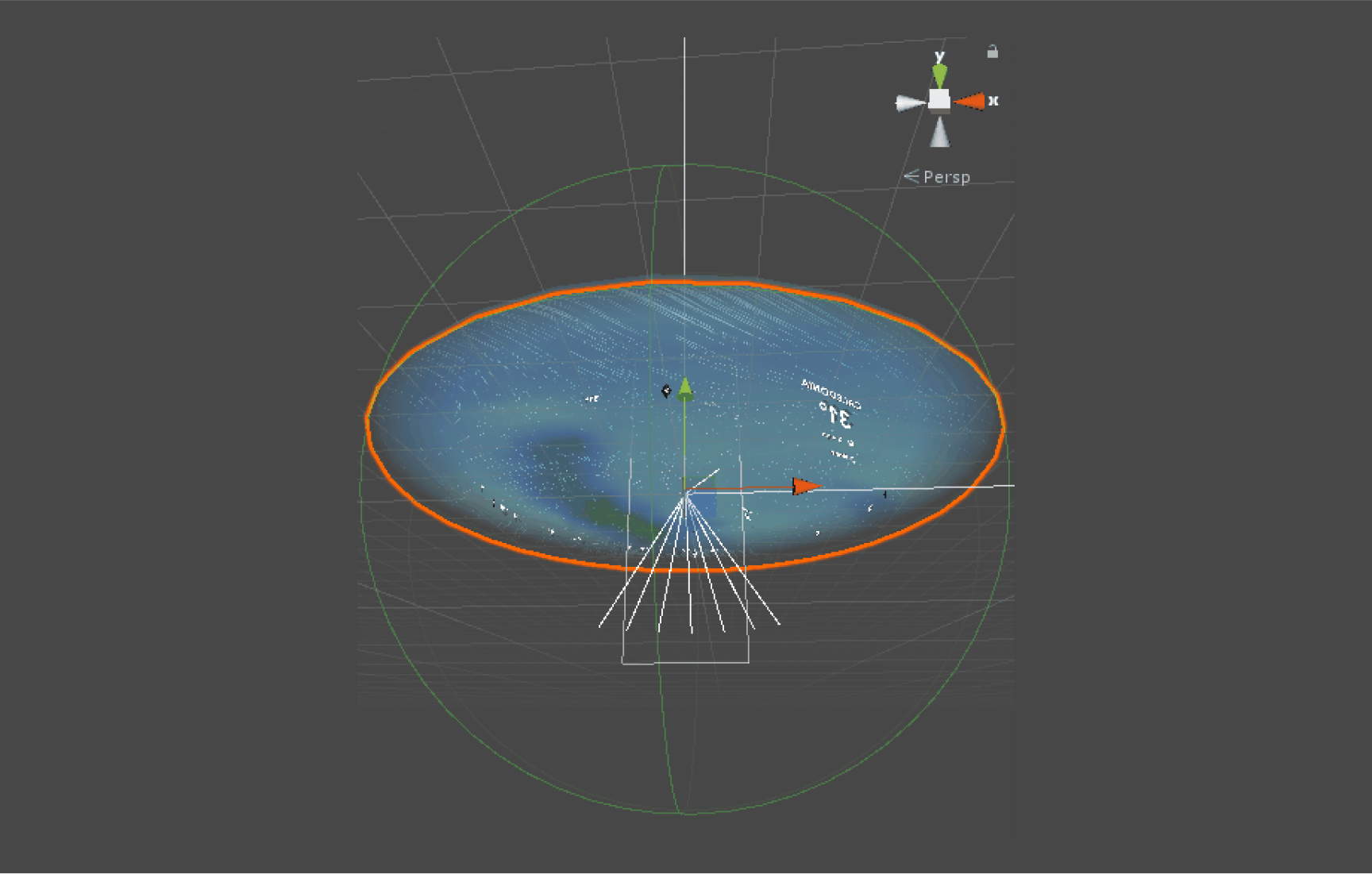

VR Space Navigation

SkyLens employs an interactive, deformable navigation bubble to facilitate movement through the virtual environment. This bubble responds to hand movements, allowing users to push, pull, or manipulate it to navigate the VR space. Unlike conventional controls, the bubble deforms in response to the user's inputs, providing a tactile and intuitive method for traversing the virtual environment without requiring external hardware.

With a solid understanding of SkyLens' architecture, we can now explore the diverse data sources that fuel this powerful system. The following section will highlight how we integrate and process various types of information to create a dynamic AR/VR environment.

Data Sources and Integration Methodologies

SkyLens uses a diverse set of data sources to create its multifaceted AR/VR environment. From satellite positions to real-time weather patterns, each data stream presents unique challenges in acquisition, processing, and integration.

This section delves into the specific data sources utilized by SkyLens and the methodologies employed to blend assorted data types into a cohesive user experience.

Satellite Data

SkyLens incorporates comprehensive satellite data from the Union of Concerned Scientists (UCS) Satellite Database. This database provides catalog and orbital information for over 5,000 satellites in orbit, including both operational and inactive satellites. The data includes satellite identification, operational status, orbital parameters, and classifications, which enable accurate rendering in the AR/VR environment.

Weather Information

SkyLens accesses weather data from multiple meteorological services via APIs, delivering hyper-localized weather information, including temperature, precipitation, wind speed, humidity, and atmospheric pressure. This data is updated in real time to provide accurate overlays in the user's environment.
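Weather services commonly report wind as a speed plus the direction the wind blows from, measured clockwise from north. Animating a wind overlay needs the opposite: the vector the air moves toward. The sketch below performs that conversion into east and north components; it assumes the source follows this meteorological convention, which should be checked for each API.

```python
import math


def wind_vector(speed_ms, direction_from_deg):
    """Return (east, north) components of the air motion in m/s.

    direction_from_deg is where the wind comes FROM, clockwise from north,
    so the motion vector points the opposite way.
    """
    rad = math.radians(direction_from_deg)
    return (-speed_ms * math.sin(rad), -speed_ms * math.cos(rad))
```

For example, a 10 m/s wind from 270° (a westerly) becomes roughly (10, 0): air moving due east.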

Flight Data

Live aviation data is sourced from flight tracking services, providing real-time updates on commercial, private, and military aircraft. Data fields include aircraft type, altitude, speed, heading, and origin/destination, which are visualized in the AR environment for enhanced situational awareness.
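To place an aircraft in the scene relative to the user, its latitude, longitude, and altitude can be converted into local east-north-up (ENU) offsets in metres. The sketch below goes through Earth-centred, Earth-fixed (ECEF) coordinates on the WGS-84 ellipsoid. It assumes altitudes are heights above the ellipsoid; flight feeds often report barometric or above-mean-sea-level altitude, which would need correcting first.

```python
import math

WGS84_A = 6378137.0                 # semi-major axis, metres
WGS84_F = 1 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)  # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h_m):
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1 - WGS84_E2 * math.sin(lat) ** 2)
    return ((n + h_m) * math.cos(lat) * math.cos(lon),
            (n + h_m) * math.cos(lat) * math.sin(lon),
            (n * (1 - WGS84_E2) + h_m) * math.sin(lat))


def geodetic_to_enu(lat_deg, lon_deg, h_m, ref_lat_deg, ref_lon_deg, ref_h_m):
    """East/north/up metres of a point relative to a reference (e.g. the user)."""
    x, y, z = geodetic_to_ecef(lat_deg, lon_deg, h_m)
    x0, y0, z0 = geodetic_to_ecef(ref_lat_deg, ref_lon_deg, ref_h_m)
    dx, dy, dz = x - x0, y - y0, z - z0
    lat, lon = math.radians(ref_lat_deg), math.radians(ref_lon_deg)
    # Rotate the ECEF offset into the reference point's local tangent frame.
    east = -math.sin(lon) * dx + math.cos(lon) * dy
    north = (-math.sin(lat) * math.cos(lon) * dx
             - math.sin(lat) * math.sin(lon) * dy
             + math.cos(lat) * dz)
    up = (math.cos(lat) * math.cos(lon) * dx
          + math.cos(lat) * math.sin(lon) * dy
          + math.sin(lat) * dz)
    return east, north, up
```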

Ground Topography and Building Data

SkyLens integrates topographical and building data to render accurate terrain models and structures within the VR environment, ensuring spatial consistency and realism for users exploring their surroundings.
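Terrain data often arrives as a regular grid of elevation samples (a heightmap). Rendering terrain, or resting objects on it, requires heights between samples; the sketch below uses bilinear interpolation. The grid layout, with rows along y and columns along x from a shared origin, is an assumption for illustration, and the grid must be at least 2x2.

```python
import math


def sample_height(grid, cell_size_m, x_m, y_m):
    """Bilinearly interpolate terrain height at (x_m, y_m).

    grid[row][col] holds heights in metres; row increases with y, col with x,
    and samples are cell_size_m apart starting at the origin.
    """
    max_col, max_row = len(grid[0]) - 1, len(grid) - 1
    # Clamp to the grid so queries outside it reuse the border samples.
    fx = min(max(x_m / cell_size_m, 0.0), max_col)
    fy = min(max(y_m / cell_size_m, 0.0), max_row)
    c0 = min(int(math.floor(fx)), max_col - 1)
    r0 = min(int(math.floor(fy)), max_row - 1)
    tx, ty = fx - c0, fy - r0
    # Interpolate along x on the two bracketing rows, then along y.
    row0 = grid[r0][c0] + (grid[r0][c0 + 1] - grid[r0][c0]) * tx
    row1 = grid[r0 + 1][c0] + (grid[r0 + 1][c0 + 1] - grid[r0 + 1][c0]) * tx
    return row0 + (row1 - row0) * ty
```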

Astronomical Calculations

Astronomical data is generated using standard ephemeris algorithms to determine the precise positions of celestial bodies relative to the user’s location. This ensures that elements like the Sun and Moon are spatially consistent within the AR experience.
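As an example of such an ephemeris calculation, the sketch below uses the low-precision solar coordinates published for the Astronomical Almanac (accurate to roughly an arcminute for dates near 2000) together with a standard approximation for Greenwich mean sidereal time. It illustrates the technique and is not necessarily the algorithm SkyLens uses; it ignores atmospheric refraction, and longitudes are positive east. The Moon requires a considerably longer series and is not shown.

```python
import math
from datetime import datetime, timezone


def julian_date(dt: datetime) -> float:
    """Julian Date for a timezone-aware datetime."""
    return dt.timestamp() / 86400.0 + 2440587.5


def sun_az_el(dt: datetime, lat_deg: float, lon_deg: float):
    """Approximate solar azimuth (clockwise from north) and elevation, in degrees."""
    n = julian_date(dt) - 2451545.0  # days since J2000.0
    mean_lon = math.radians((280.460 + 0.9856474 * n) % 360)
    mean_anom = math.radians((357.528 + 0.9856003 * n) % 360)
    ecl_lon = mean_lon + math.radians(1.915 * math.sin(mean_anom) + 0.020 * math.sin(2 * mean_anom))
    obliquity = math.radians(23.439 - 0.0000004 * n)
    # Equatorial coordinates of the Sun.
    ra = math.atan2(math.cos(obliquity) * math.sin(ecl_lon), math.cos(ecl_lon))
    dec = math.asin(math.sin(obliquity) * math.sin(ecl_lon))
    gmst_deg = (280.46061837 + 360.98564736629 * n) % 360  # Greenwich mean sidereal time
    ha = math.radians(gmst_deg + lon_deg) - ra              # local hour angle
    lat = math.radians(lat_deg)
    # Equatorial to horizontal coordinates.
    el = math.asin(math.sin(lat) * math.sin(dec) + math.cos(lat) * math.cos(dec) * math.cos(ha))
    az = math.atan2(-math.cos(dec) * math.sin(ha),
                    math.sin(dec) * math.cos(lat) - math.cos(dec) * math.cos(ha) * math.sin(lat))
    return math.degrees(az) % 360, math.degrees(el)
```

For Paris at 12:00 UTC on 21 June 2024, this places the Sun slightly west of due south at an elevation of about 64°.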

Having examined the data that drives SkyLens, we next look at the technical challenges of delivering it on the headset, followed by the user interaction frameworks that make SkyLens informative, immersive, and intuitive.

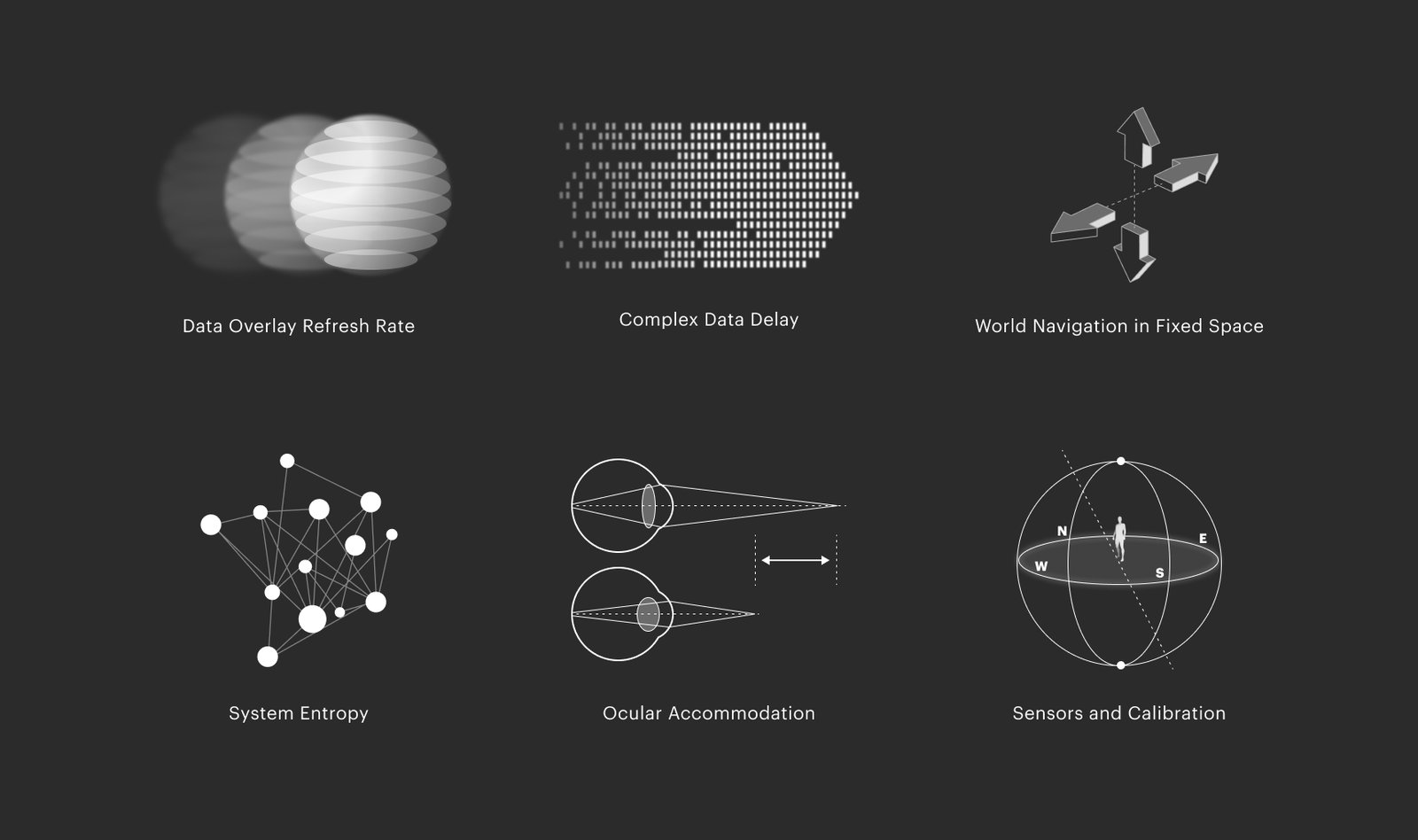

Technical Challenges and Performance Optimization

Developing SkyLens for the Meta Quest 3 presented several complex technical challenges that required innovative solutions to ensure a seamless, responsive, and immersive AR/VR experience. This section outlines the key challenges we faced during development and how we addressed them.

Real-Time Data Synchronization

SkyLens manages real-time synchronization of multiple data sources with varying update frequencies. A custom-built data pipeline with a multi-threaded architecture ensures seamless data integration, providing smooth and responsive updates to the AR/VR environment.
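The core idea of a multi-rate pipeline is that each feed refreshes on its own schedule while slow network calls run on worker threads. This is a minimal sketch of that idea using Python's `concurrent.futures`; the source names, intervals, and fetch callables are hypothetical. For simplicity, `tick` waits for the fetches it starts; in a headset application the render loop would instead poll for completed results so it never blocks.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Source:
    name: str
    interval_s: float            # how often this feed should refresh
    fetch: Callable[[], Any]     # hypothetical fetch function for the feed
    last_run: float = float("-inf")


class MultiRatePipeline:
    """Refreshes each data source on its own interval using a worker pool."""

    def __init__(self, sources: List[Source], max_workers: int = 4):
        self.sources = sources
        self.latest: Dict[str, Any] = {}
        self.pool = ThreadPoolExecutor(max_workers=max_workers)

    def due(self, now: float) -> List[Source]:
        return [s for s in self.sources if now - s.last_run >= s.interval_s]

    def tick(self, now: float = None) -> List[str]:
        """Fetch every source that is due, in parallel, and store the results."""
        now = time.monotonic() if now is None else now
        due = self.due(now)
        futures = {s.name: self.pool.submit(s.fetch) for s in due}
        for s in due:
            s.last_run = now
        for name, fut in futures.items():
            self.latest[name] = fut.result()  # production code would handle errors/timeouts
        return sorted(futures)

    def close(self):
        self.pool.shutdown()
```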

3D Spatial Accuracy

Ensuring accurate depth and spatial consistency in the AR/VR environment required precise calibration. SkyLens employs dynamic depth-scaling algorithms that adjust the rendering of objects based on real-world distances and the user’s perspective, ensuring a high degree of realism.
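One way to implement depth scaling for objects far beyond a comfortable render range (a satellite hundreds of kilometres away, for instance) is to pull them closer along the same line of sight and shrink them by the same ratio, which preserves their apparent angular size. The sketch below illustrates that idea with hypothetical parameters; it uses logarithmic compression so that farther objects stay farther, preserving depth order.

```python
import math


def compress_depth(position, near_limit_m=500.0, falloff_m=200.0):
    """Pull a distant object inside the render range while keeping its angular size.

    Objects within near_limit_m are untouched. Beyond it, distance is compressed
    logarithmically (still monotonic, so depth order is preserved) and the
    object's scale is reduced by the same ratio, so it subtends the same angle.
    Returns (new_position, scale_factor).
    """
    d = math.sqrt(sum(c * c for c in position))
    if d <= near_limit_m:
        return tuple(position), 1.0
    new_d = near_limit_m + falloff_m * math.log1p((d - near_limit_m) / falloff_m)
    ratio = new_d / d
    return tuple(c * ratio for c in position), ratio
```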

Interaction Responsiveness

Achieving responsive interaction required optimizing hand tracking and gaze detection algorithms. SkyLens uses hardware acceleration and machine learning models to ensure that user inputs are registered promptly, resulting in a highly interactive experience.

User Interaction and XR Design

The true power of SkyLens lies not only in its data visualization capabilities but in how users interact with and navigate its data environments. This section explores the interaction paradigms implemented in SkyLens, from gaze tracking to gesture recognition. We'll examine how we combined these technologies to create an intuitive, immersive interface.

Navigation Bubble Mechanics

The navigation bubble serves as the primary method for moving through the VR environment. This deformable bubble reacts to the user's hand movements, enabling smooth navigation. When pushed or pulled, the bubble deforms and shifts, propelling the user through the virtual world. This controller-free approach ensures intuitive navigation and leverages natural hand gestures for movement.
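The mapping from bubble deformation to motion can be sketched as a velocity function of how far the hand has pushed the bubble's surface. The dead zone, gain, speed cap, and the convention that the user travels along the push direction are all assumptions for illustration, not SkyLens' tuned values.

```python
import math


def bubble_velocity(displacement, dead_zone_m=0.02, gain=20.0, max_speed=50.0):
    """Map how far the hand has pushed the bubble surface to a travel velocity.

    displacement: 3D vector (metres) from the bubble's rest surface point to
    the hand. Pushes inside the dead zone are ignored so hand tremor does not
    move the user; beyond it, speed grows linearly (gain in m/s per metre)
    and is capped at max_speed.
    """
    mag = math.sqrt(sum(c * c for c in displacement))
    if mag <= dead_zone_m:
        return (0.0, 0.0, 0.0)
    speed = min(gain * (mag - dead_zone_m), max_speed)
    return tuple(c / mag * speed for c in displacement)
```

The dead zone matters most for controller-free input, since tracked hands are never perfectly still.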

Gaze Tracking System

Gaze-based interaction uses the headset's tracking to determine where the user is looking (the Meta Quest 3 has no dedicated eye-tracking sensors, so gaze is derived from head orientation). By directing their gaze at objects, users can select data points or activate HUD elements, simplifying interaction within the AR/VR environment.
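Gaze selection is commonly confirmed by dwell time: a target activates after the user has looked at it continuously for a short period. The sketch below assumes a per-frame gaze hit result (a target ID or `None`) and the frame duration; the class name and the 0.8 s default are hypothetical.

```python
class DwellSelector:
    """Fires a selection once the gaze has rested on the same target long enough."""

    def __init__(self, dwell_s=0.8):
        self.dwell_s = dwell_s
        self.target = None
        self.elapsed = 0.0
        self.fired = False

    def update(self, target, dt):
        """Call once per frame with the gazed-at target (or None) and frame time in seconds."""
        if target != self.target:
            # Gaze moved to a new target: restart the timer.
            self.target, self.elapsed, self.fired = target, 0.0, False
            return None
        if target is None or self.fired:
            return None
        self.elapsed += dt
        if self.elapsed >= self.dwell_s:
            self.fired = True  # fire only once per continuous dwell
            return target
        return None
```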

Speech Recognition Integration

SkyLens incorporates speech recognition, allowing users to interact verbally with the application. Natural language processing supports conversational commands, enabling efficient, hands-free operation.

Heads-Up Display (HUD) Architecture

The HUD system in SkyLens is designed to present object-specific information without obstructing the user's field of view. Dynamic scaling ensures that data is displayed appropriately, matching the user's focus and maintaining an uncluttered interface.
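Dynamic HUD scaling often aims to keep labels at a constant apparent size regardless of distance. Since an object of height h at distance d subtends an angle θ where h = 2d·tan(θ/2), the label's world-space height can be computed directly. The target angle and clamping limits below are hypothetical.

```python
import math


def label_height_m(distance_m, angular_size_deg=1.5, min_m=0.01, max_m=50.0):
    """World-space label height that appears at a constant angular size."""
    h = 2.0 * distance_m * math.tan(math.radians(angular_size_deg) / 2.0)
    # Clamp so very near labels stay legible and very far ones stay bounded.
    return min(max(h, min_m), max_m)
```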

Data Overlay Rendering

Data overlays are rendered using spatially aware algorithms that align data points with real-world counterparts. This ensures accurate placement and enhances the realism of the AR/VR experience.

Transforming Data Visualization: The Sky Is Not the Limit

As we continue to push the technology forward, SkyLens delivers a sophisticated AR/VR experience that shows the transformative potential of AR/VR technology in data visualization and interaction.

By blending real-time data with intuitive user interfaces, SkyLens enhances our ability to understand complex information and paves the way for new applications across industries. From disaster management to urban planning, the possibilities feel endless.

We continue to refine and expand SkyLens. The next iteration will add new capabilities, such as overlaying additional map types and collaborating spatially with other users. SkyLens stands out as a cutting-edge application for data visualization in AR/VR and will continue transforming how users visualize and interact with real-world data in their environments.